When CrowdStrike Goes Offline: The Great Global Tech Panic

Picture this: hospitals lose access to patient records, airlines ground flights, banks freeze cards, and government portals go dark, all because one big cybersecurity firm shipped a faulty update. It was the kind of glitch that sent organizations around the world scrambling for an emergency plan.

How the Catastrophe Unfolded

- Hospitals: Patient records became unreachable, and staff had to choose between falling back to paper and waiting for systems to reboot.

- Airlines: Flight schedules fell out of sync, leaving many travelers in a “Where’s my seat?” mood.

- Banks: Accounts froze, card payments failed, and about the only thing still working was the customer hotline.

- Government offices: Filing systems stopped, causing a brief existential crisis for anyone trying to submit a form.

Lessons Learned & What’s Next?

Fast forward a few months and the big players are walking a tightrope: fix the gate while keeping the animals in line. Here’s what’s being done to make sure the next global tech hiccup ends up as a well‑briefed bug report, not a full‑blown panic:

- Disaster drills now hold a mandatory slot every quarter—banks, airlines, hospitals, and governments rehearse data‑fail scenarios as if they’re auditioning for a survival reality show.

- Double‑check systems are a new industry standard. Every critical service now has redundancy built in—a backup plan that boots up automatically when the first line drops.

- Cyber‑security champions are moving from “reactive” to “proactive.” They’re hunting for weaknesses before anyone else does: think of it as an alarm that sounds before an issue turns into a headache.

- Transparency is becoming mandatory. If a service goes AWOL, it should publish a quick status update telling users what is broken and how far along the fix is.

- Hack‑hardened partnerships: CrowdStrike (and its peers) now collaborate on public risk dashboards that quickly flag widespread problems so everyone can roll out fixes faster.

Despite the chaos, businesses aren’t looking back. The new focus is on human‑friendly resilience: waiting for the next update should feel less like a gamble and more like a coordinated team effort. The fix? Keep servers humming, double‑check every step, and, most importantly, stay prepared for the next once‑in‑a‑blue‑moon event. And if a bug strikes again, at least now we know how to ride out the storm without the whole world hitting the panic button.

When a Cool Update Turns into a Classic Blue Screen Disaster

Picture this: a routine software patch, nothing out of the ordinary, and… BSOD. On 19 July 2024, CrowdStrike, one of the best‑known names in cybersecurity, rolled out its latest Falcon update for Windows. Instead of catching cyber threats like a hawk, it handed users around the world the dreaded blue screen of death, a massive glitch that stopped roughly 8.5 million PCs from working.

The Quirky Numbers Behind the Chaos

- Roughly 8.5 million Windows devices knocked out.

- Losses for affected companies run into the billions; one rough estimate puts the total at $10 billion (about €8.59 billion).

- Tweet‑ready “Who needs an update?” comments across the globe.

Why Did No One Double‑Check Before Going Live?

Steve Sands, an IT pro at the Chartered Institute for IT, told Euronews Next, “No one could see the curtain‑drawn adventure ahead. Nobody wrote a play‑book for a full‑plate suspension of reality.”

There was a big fear that if an outage like this happened, as it did, Windows‑reliant firms would have no contingency. The lesson? Even when an update looks routine, test it before it goes live to avoid a tech‑world Olympic moment.

What CrowdStrike Gleaned and the Take‑Aways for Others

- The company says it is reworking how it rolls out patches.

- It will introduce sandbox testing that’s as thorough as a thoroughbred inspection.

- Future update plans will include a failsafe rollback, basically an “undo” button for when something goes sideways.
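To make the rollback idea concrete, here is a minimal sketch in Python. It is not CrowdStrike's implementation: the `Host` class, the `update_with_rollback` function, and the `boots_cleanly` health check are all hypothetical names invented for illustration. The assumption is simply that each machine remembers its previous version and reverts when a post-install health check fails.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Host:
    """Hypothetical stand-in for a machine that receives updates."""
    name: str
    version: str
    history: List[str] = field(default_factory=list)

    def install(self, new_version: str) -> None:
        # Remember the current version so there is something to go back to.
        self.history.append(self.version)
        self.version = new_version

    def rollback(self) -> None:
        # Restore the most recently replaced version, if there is one.
        if self.history:
            self.version = self.history.pop()


def update_with_rollback(host: Host, new_version: str,
                         is_healthy: Callable[[Host], bool]) -> bool:
    """Install new_version, then revert if the health check fails."""
    host.install(new_version)
    if is_healthy(host):
        return True
    host.rollback()
    return False


def boots_cleanly(host: Host) -> bool:
    # Illustrative health check: pretend version "2.0-bad" crashes the host.
    return host.version != "2.0-bad"
```

In this sketch a failed update leaves the host on the version it had before. A real rollback also has to work when the machine cannot boot at all, which is the hard part this toy example skips.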

So, next time you hit “install,” remember: better to proceed with a caution flag than to risk a one‑day, worldwide catastrophe. Stay sharp, folks, and if you’ve got a solid backup strategy, that’s a big plus!

‘Round-the-clock’ surveillance of IT environment needed

What This Year’s Outages Are Telling Us About Cybersecurity

Just a year after CrowdStrike hit the headlines, the tech world is still scrambling: glitches at Cloudflare, Microsoft’s Authenticator, and SentinelOne have knocked big names like Google Cloud and Spotify offline.

Why the Disasters Keep Happening

- Cloudflare’s own service hiccup in June caused a domino effect that took down Google Cloud and Spotify.

- When Microsoft tweaked its Authenticator app in July, thousands of Outlook and Gmail users were locked out.

- A software flaw at SentinelOne wiped out critical network routes, stalling its own operations.

According to Eileen Haggerty, investigating these incidents after the fact isn’t the only job; prevention is key. She urges firms to run 24/7 monitoring across both their networks and the broader IT environment.

Synthetic Testing: A Game‑Changer?

Haggerty’s secret weapon? Synthetic tests: scripted transactions that mimic real user traffic so problems surface before a full‑blown outage erupts. Think of them as practice runs that give teams a sneak peek at potential problems before users run into them.

Microsoft’s blog admits that synthetic monitoring isn’t flawless; real-world releases can still shake up the system. Yet it does boost the speed at which problems are corrected once spotted.
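As a rough illustration of what a synthetic test looks like in practice, the Python sketch below runs a scripted transaction, times it, and raises an alert only after several consecutive failures. Everything here is an assumption made for the example: `ProbeResult`, `run_probe`, `should_alert`, and the three-failure threshold are hypothetical and do not come from any vendor's tooling.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ProbeResult:
    ok: bool
    latency_s: float
    error: str = ""


def run_probe(transaction: Callable[[], None], max_latency_s: float) -> ProbeResult:
    """Run one scripted transaction and record whether it passed in time."""
    start = time.monotonic()
    try:
        transaction()
    except Exception as exc:
        # Any exception from the scripted steps counts as a failed probe.
        return ProbeResult(False, time.monotonic() - start, repr(exc))
    latency = time.monotonic() - start
    # A transaction that succeeds but is too slow is also treated as a failure.
    return ProbeResult(latency <= max_latency_s, latency)


def should_alert(results: List[ProbeResult], failures_needed: int = 3) -> bool:
    """Alert only when the last `failures_needed` probes all failed."""
    recent = results[-failures_needed:]
    return len(recent) == failures_needed and not any(r.ok for r in recent)
```

In a real deployment the `transaction` callable would log in, load a page, or call an API the way a user would, and the probe would run on a schedule from several locations. Requiring consecutive failures is one simple way to avoid paging someone over a single blip.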

Building a Post‑Live Playbook

- After any outage, compile a detailed “why‑did‑this‑happen” dossier.

- Include resilience plans and recovery steps that highlight any external dependencies.

- Integrate these insights early in the build process—adding them later is like patching a leak with a coffee tin.

Many firms update their incident plans post‑event, but Nathalie Devillier warns that the memory of short‑term chaos can fade quickly, leaving them largely unprepared when the next crisis pops up.

Keeping It All in Europe

Devillier further argues that cloud and IT security solutions should stay within the EU, to avoid relying on foreign technology that could destabilize local infrastructure.

In short, the line of defense is thickening—but only if you treat it as a living organism that evolves, practices, and remembers every setback.

What has Crowdstrike itself done after the outage?

CrowdStrike Gets a “Self‑Healer” Feature

What’s new? In a blog post this month, CrowdStrike describes a clever twist on its own tech: a self‑recovery mode. Think of it as a health check for your machines: it spots the classic “crash loop” and, without human intervention, boots systems into safe mode. No more waking up to a dozen crashed computers.
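One common way to spot a crash loop is to count crashes inside a sliding time window. The Python sketch below shows that idea only; it is not how CrowdStrike's self‑recovery mode is built, and the `CrashLoopDetector` class, the three-crash threshold, and the ten-minute window are assumptions chosen for illustration.

```python
from collections import deque
from typing import Deque


class CrashLoopDetector:
    """Flags a crash loop when too many crashes land inside one time window."""

    def __init__(self, max_crashes: int = 3, window_s: float = 600.0) -> None:
        self.max_crashes = max_crashes
        self.window_s = window_s
        self._crashes: Deque[float] = deque()

    def record_crash(self, timestamp: float) -> bool:
        """Record a crash time (in seconds); return True if safe mode is warranted."""
        self._crashes.append(timestamp)
        # Forget crashes that are older than the window.
        while self._crashes and timestamp - self._crashes[0] > self.window_s:
            self._crashes.popleft()
        return len(self._crashes) >= self.max_crashes
```

Three crashes within ten minutes trip the detector, while three crashes spread over an hour do not. On a real machine the crash history would have to be stored somewhere that survives the crash itself, for example on disk.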

Testing the Update Dance

One neat trick the company has added is a new interface that gives admins more control over updates. Instead of all the machines updating at once (cue the kill‑switch chaos), admins can set separate schedules for test systems and critical infrastructure. They can specify who gets the update first, who waits for a night‑time patch window, and who watches from the sidelines.
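The underlying pattern is usually called a staged or ring-based rollout. Here is a minimal Python sketch of it, with the caveat that the ring names, the `staged_rollout` function, and the `deploy` callback are all hypothetical and not part of any CrowdStrike interface.

```python
from typing import Callable, Dict, List

# Assumed ring order: least critical machines go first.
RINGS: List[str] = ["test", "general", "critical"]


def staged_rollout(hosts_by_ring: Dict[str, List[str]],
                   deploy: Callable[[str], bool]) -> List[str]:
    """Deploy ring by ring and stop before the next ring if any host fails.

    Returns the hosts that were updated successfully.
    """
    updated: List[str] = []
    for ring in RINGS:
        ring_ok = True
        for host in hosts_by_ring.get(ring, []):
            if deploy(host):
                updated.append(host)
            else:
                ring_ok = False
        if not ring_ok:
            # A failure in this ring halts the rollout; later rings stay untouched.
            break
    return updated
```

With a fleet such as `{"test": ["lab-1", "lab-2"], "general": ["desk-1"], "critical": ["er-1"]}`, a failure on `lab-2` means the general and critical machines never receive the update. A production system would typically also wait and observe each ring for a while before moving on.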

Pin the Content Like a Ninja

Ever wished you could lock a specific version in place and keep it there until you’re ready to move on? CrowdStrike’s content pinning turns that wish into reality. The system lets admins choose exactly when and how content updates roll out, a kind of “hurry up or hold on” rock‑star power.
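Conceptually, pinning boils down to a lookup: a pinned group stays on its chosen version while everyone else follows the latest release. The Python sketch below assumes integer content versions and a per-group pin table; the `resolve_content_version` function and the group names are hypothetical and are not CrowdStrike's API.

```python
from typing import Dict, Optional


def resolve_content_version(latest: int, pins: Dict[str, int], group: str) -> int:
    """Return the content version a host group should run.

    Unpinned groups follow the latest version. A pinned group stays on its
    pin, capped at the latest version so a stale or mistyped pin cannot
    point past what has actually been released.
    """
    pinned: Optional[int] = pins.get(group)
    if pinned is None:
        return latest
    return min(pinned, latest)
```

For example, with `pins = {"critical": 41}` and a latest version of 45, the critical group resolves to 41 and an unpinned test group resolves to 45. The trade-off is that a pinned group also misses new detections until someone moves the pin.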

Digital Operations Center: 24/7 Eyes on the World

Now there’s a Digital Operations Center (DOC) that gives the team a view of its global “daisy chain” of computers. The company says it’s all about deeper visibility and faster response. Imagine having a GPS for every device: you’d know the exact point of failure before anyone else does.

Code, Quality, & Reviews – The Triple‑Threat

- They run regular code inspections to spot bugs.

- Quality processes are tweaked all the time.

- Operational procedures get a fresh look so no crisis surprises them.

CEO Makes a Big Statement

George Kurtz, the CEO, posted on LinkedIn: “What defined us wasn’t that moment, it was everything that came next.” He added that the firm is now grounded in resilience, transparency, and relentless execution. The message is clear: adversity was the lightning strike that pushed the team to fix the wiring.

Complexity & Resilience Reality Check

Obviously, no silver bullet turns every outage into a distant memory. Sands points out that computers and networks are inherently complex, with a web of dependencies that no one fully controls.

“We can certainly improve the resilience of our systems from an architecture and design perspective … and we can prepare better to detect, respond and recover our systems when outages happen,” he says.

Bottom line? CrowdStrike’s fresh tools and stronger team mindset paint a hopeful picture. They’re not erasing downtime, but turning it into a learning library that may well keep future outages from becoming a full‑blown drama. With a self‑healer, test scheduling wizardry, content pins, and a 24/7 DOC, the company has more safeguards in place than it did before the outage.


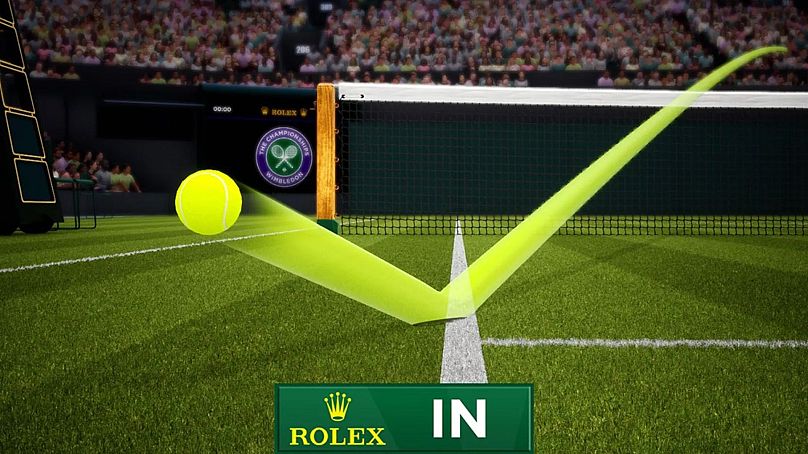
